In the world of Kubernetes, scaling a real-time data pipeline using Kafka comes with unique challenges, like processing millions of Kafka messages spread across various Kafka topics efficiently. Today, we’ll look into using it to scale and streamline a very popular Asian app called Grab.

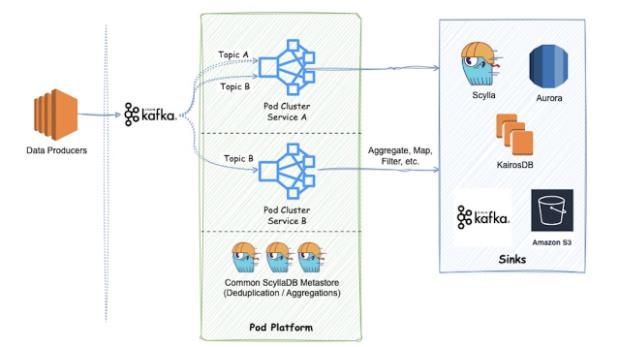

Grab is a data processing platform on Kubernetes that consumes millions of messages streamed across thousands of Kafka topics. Grab operates as deployments in a Kubernetes cluster, and each deployment consumes data from specific Kafka topic partitions. The load on a pod depends on the partition it consumes. Let’s imagine that a customer orders a meal using Grab.

The data, which is generated by the platform, needs to go through certain data engineering changes, like aggregation before it can be used by other teams. This is where Grab’s generic platform kicks in. The users design their logic, their own business case data transformation on this platform in a very generic way, and then use this data. For instance, this order data might be used by analysts to improve the customer experience.

In a nutshell, the Kubernetes cluster is running Grab deployments consuming Kafka and the data being produced by various service teams. Then this data, after transformation, goes to predefined stores like Scylla, Cairos, and MySQL for real-time as well as offline use cases.

Kafka Consumers on Kubernetes: Optimization Challenges

The idea of running Kafka consumers on Kubernetes comes with challenges.

These challenges include:

- The goal of Grab’s infrastructure is to ensure scalability and availability. Building a scalable but cost-effective solution is a major challenge.

- The second challenge is to balance the load across all the pods in the deployment. Kafka consumers directly consume from the partitions of a topic to ensure that the load across each part of the deployment is the same to avoid situations like noisy neighbor problems or pod-level throttling, which can be quite problematic.

- Maintaining data freshness is also a priority. In simple terms, data freshness basically means that once the data has been generated by the producer, it can be used after it goes through the platform. This is generally controlled or basically identified by the consumer lag of a certain pipeline. This is crucial for the system.

- Grab’s philosophy is to provide a good user experience to the service teams, and we don’t want them to actually do a lot of resource tuning for their particular pipelines. The goal is to abstract that information so that they can just focus on their pipeline logic rather than worrying about infrastructure scalability.

Strategies to Overcome Optimization Challenges

Grab’s current infrastructure was designed by trying various available solutions. Let’s take dive deep into the approaches considered before arriving at the current architecture.

The Vertical Pod Autoscaler Approach

The platform optimization journey begins with the vertical pod autoscaler, also known as VPA. The vertical pod autoscaler scales the application vertically rather than horizontally. Initially, memory and CPU metrics were used for VPA scaling. This demonstrates the VPA’s general functionality, where it changes the size of the pod. The consumers are Kafka consumers, and each pod connects to a single partition.

To begin with, the number of replicas in the deployment is kept the same as the number of partitions that particular deployment is consuming. After this, VPA takes over to decide the right size of the deployments based on the resource requirements for stability.

This particular setup helped to provide a good abstraction for the end users because they don’t have to configure any resources or even tune when there’s a change in the traffic. Over a period of time, the pod would automatically resize with the changing business trends, and there was no requirement from the platform team or from the end user to intervene.